The following short overview of elements that could (and often need) to be optimized in the RL pipeline follows our AutoRL survey. For a concise overview of existing solution approaches to the AutoRL problem, we refer to the survey itself.

What Needs to be Automated?

Designing Tasks

While RL does not require a pre-labeled set of training data, all the elements that generate the data on-the-fly need to be set up accordingly. For example, when interacting with an environment, RL agents need guidance from a reward signal. Designing an informative reward signal that enables RL agents to learn desirable behaviors is often not trivial.

Similarly, designing the features describing the state of the environment an agent is occupying often requires careful thought. If most of the individual states are virtually indistinguishable, even the best reward signal can not hope to provide good guidance to an agent. Choosing the set of admissible actions which an agent should learn to use also can dramatically impact the learning abilities of agents. For example, a discrete action space with many irrelevant actions requires large amounts of exploration before finding the “needle in the haystack” action that will ultimately lead to large rewards.

Thus, when aiming to automate the RL pipeline, it is already crucial to think of how to set up environments, or even automate the design of such, to train maximally performant agents. Our groups have various works that automate this element of the RL pipeline or propose novel benchmarks to give a high degree of freedom in the environment design.

Which Algorithm to Use

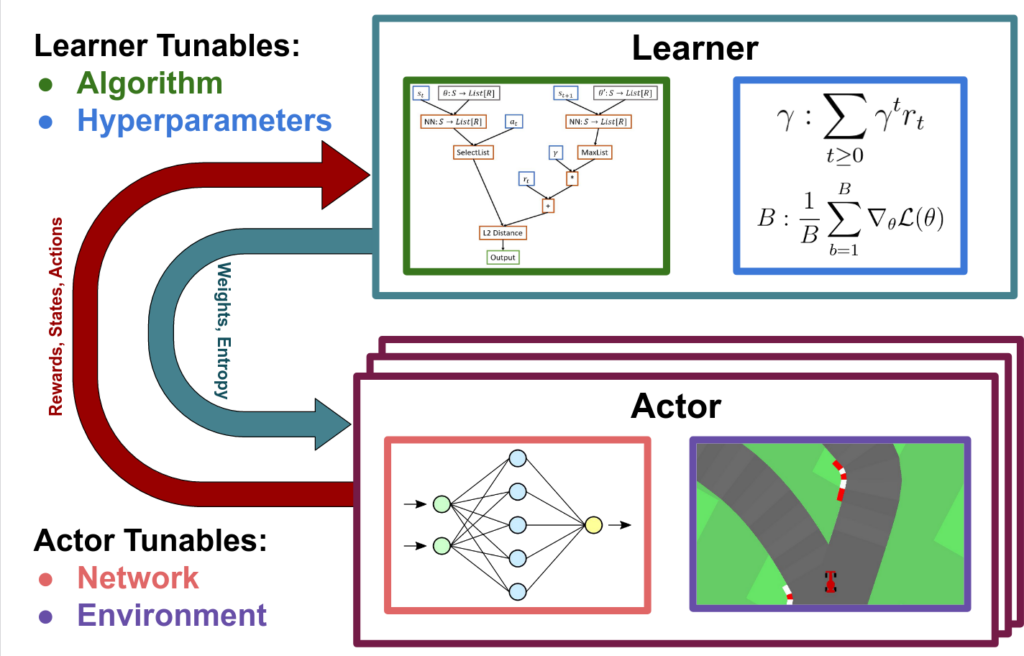

The choice of which algorithm to use is often a tough one to make. Over the last several years a variety of RL algorithms have been proposed that exhibit various strengths and weaknesses. While simple knowledge about the target environment can already help in choosing your algorithm (e.g., all RL algorithms are typically tailored to either discrete or continuous action spaces, but not both) it is often not enough to infer the correct choice of algorithm to use. To make matters worse, there are many implementations that differ only in minute details and cause the “same algorithm” to have drastically different behavior. Thus, RL enthusiasts have often employed an RL algorithm simply because of its good performance on (what are likely) unrelated problems. Using meta-learning techniques from the AutoML realm can help alleviate this issue by providing a principled way of deciding or studying which RL algorithms to use for particular environments.

The Choice of Architectures

Having chosen the learning algorithm is unfortunately not enough. Nowadays, most if not all RL algorithms use deep neural networks to learn approximations of the expected reward that will be achieved by a policy. Thus, choosing the right network architecture can have a huge impact on the learning abilities of agents. A well known example from the RL literature contrasts the “Nature DQN” (which first showed the strength of deep RL methods) with the “IMPALA-CNN”. The latter has gradually replaced the former due to exhibiting better performance with different algorithms, without having drastic differences. Often however, the cost of the RL problem itself has stopped researchers from evaluating other alternatives. Methods from AutoML, and in particular NAS, can help in searching and ultimately finding architectures that are better suited for RL. This choice can even be made or altered while the RL agents are learning.

Hyperparameters

Last but not least, RL agents have a variety of hyperparameters. Well known hyperparameters deal with trading off the exploration-exploitation of the agent or how the networks themselves are updated (think choice of optimizers or batch sizes). This element of the RL pipeline is likely the most studied so far and has spawned many solution approaches. One interesting challenge for the AutoML community here is that RL agents change their data distribution while learning. Thus, contrary to most supervised learning settings, it is very likely that a large portion of hyperparameters need to be changed while the agent is training. AutoML by itself already provides many methods to deal with static hyperparameter choices but only a few with dynamically changing hyperparameters that change during the learning process. To deal with the changing data distributions inherent in RL, there need to yet be more methods proposed that can dynamically adapt hyperparameters and the future outlook in our research is rich in potential.

Reference

Jack Parker-Holder, Raghu Rajan, Xingyou Song, André Biedenkapp, Yingjie Miao, Theresa Eimer, Baohe Zhang, Vu Nguyen, Roberto Calandra, Aleksandra Faust, Frank Hutter, and Marius Lindauer

Journal of Artificial Intelligence Research (JAIR), 74, pp. 517-568, 2022

https://www.jair.org/index.php/jair/article/view/13596