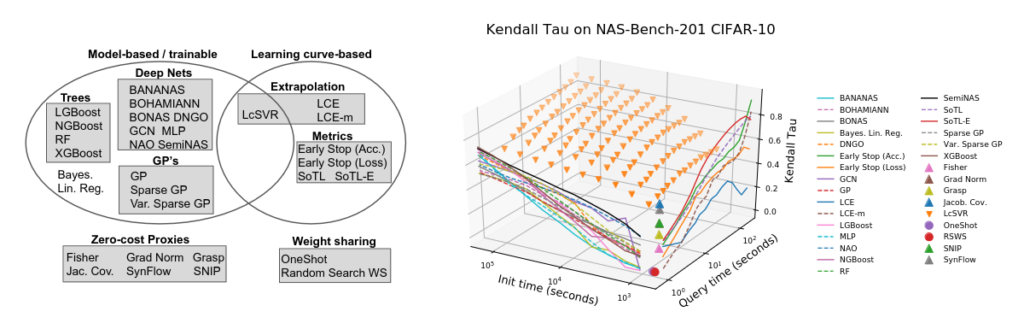

Fast performance estimation is crucial in Neural Architecture Search (NAS) because training neural networks is expensive. A performance predictor in NAS takes an architecture (or its encoding) as input and learns to predict a metric of interest, such as accuracy or latency. In NASLib, we have implemented and compared 31 performance predictors, spanning learning curve extrapolation methods, weight sharing methods, zero-cost methods, and model-based methods. We have evaluated these predictors in a variety of settings and with respect to several metrics.
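To make the input/output contract of a model-based predictor concrete, here is a minimal sketch in plain Python. It assumes architectures are encoded as fixed-length tuples of operation indices (one choice per edge of a cell). `one_hot`, `KNNPredictor`, `kendall_tau`, and `toy_accuracy` are hypothetical names for illustration only. They are not NASLib's API. The k-nearest-neighbour regressor is a deliberately simple stand-in for the model-based predictors NASLib implements. Kendall tau is shown as one example of a rank-correlation metric for judging how well predicted scores order architectures.

```python
import itertools
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

# Hypothetical encoding: one operation index per edge of a cell.
Encoding = Tuple[int, ...]


def one_hot(arch: Encoding, num_ops: int) -> List[float]:
    """Flatten an operation-index encoding into a one-hot feature vector."""
    vec = [0.0] * (len(arch) * num_ops)
    for edge, op in enumerate(arch):
        vec[edge * num_ops + op] = 1.0
    return vec


@dataclass
class KNNPredictor:
    """Toy model-based predictor: averages the metric of the k nearest training architectures."""
    num_ops: int
    k: int = 3
    _x: List[List[float]] = field(default_factory=list)
    _y: List[float] = field(default_factory=list)

    def fit(self, archs: Sequence[Encoding], metrics: Sequence[float]) -> None:
        # Store encoded training architectures and their observed metric values.
        self._x = [one_hot(a, self.num_ops) for a in archs]
        self._y = list(metrics)

    def query(self, arch: Encoding) -> float:
        x = one_hot(arch, self.num_ops)
        # Squared Euclidean distance between one-hot vectors
        # (equals 2 x the number of edges whose operation differs).
        dists = sorted(
            ((sum((a - b) ** 2 for a, b in zip(x, xi)), yi)
             for xi, yi in zip(self._x, self._y)),
            key=lambda pair: pair[0],  # stable sort: ties keep training order
        )
        nearest = dists[: self.k]
        return sum(y for _, y in nearest) / len(nearest)


def kendall_tau(pred: Sequence[float], true: Sequence[float]) -> float:
    """Kendall rank correlation (tau-a): (concordant - discordant) / number of pairs."""
    n = len(pred)
    score = 0
    for i in range(n):
        for j in range(i + 1, n):
            s = (pred[i] - pred[j]) * (true[i] - true[j])
            score += (s > 0) - (s < 0)
    return score / (n * (n - 1) / 2)


if __name__ == "__main__":
    def toy_accuracy(arch: Encoding) -> float:
        # Made-up ground truth, purely illustrative (not real benchmark data).
        return 0.5 + 0.1 * arch.count(2) - 0.05 * arch.count(0)

    archs = list(itertools.product(range(3), repeat=3))  # 3 edges, 3 ops each
    train, test = archs[::2], archs[1::2]
    predictor = KNNPredictor(num_ops=3, k=3)
    predictor.fit(train, [toy_accuracy(a) for a in train])
    preds = [predictor.query(a) for a in test]
    tau = kendall_tau(preds, [toy_accuracy(a) for a in test])
    print(f"Kendall tau on held-out architectures: {tau:.2f}")
```

Rank correlation is used here rather than, say, mean absolute error because in NAS a predictor is mostly needed to order candidate architectures correctly, not to match their exact accuracy.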