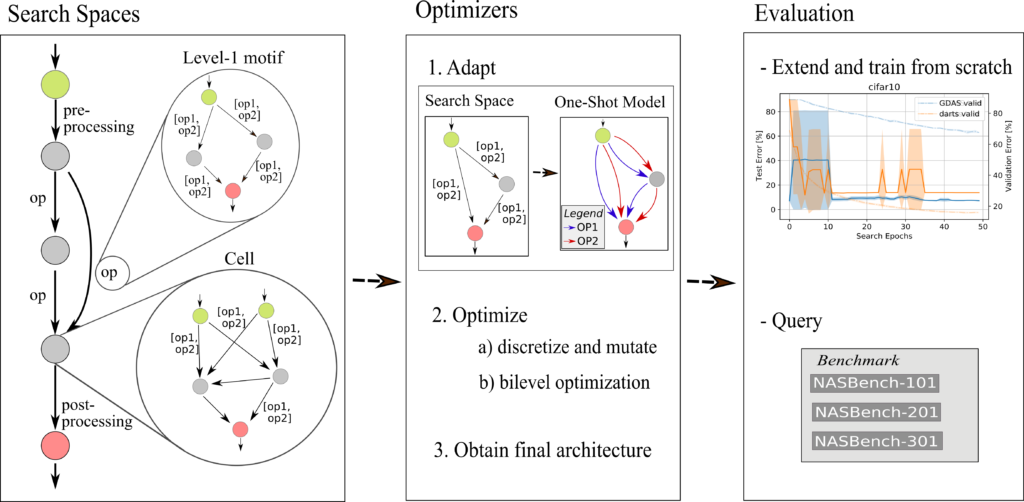

Neural Architecture Search (NAS) is one of the focal points for the Deep Learning community, but reproducing NAS methods is extremely challenging due to numerous low-level implementation details. To alleviate this problem, we developed NASLib, a NAS library built upon PyTorch. The framework offers high-level abstractions for designing and reusing search spaces, as well as interfaces to benchmarks and evaluation pipelines, so that state-of-the-art NAS methods can be implemented and extended with a few lines of code. The modular design of NASLib lets researchers innovate on individual components (e.g., define a new search space while reusing an optimizer and evaluation pipeline, or propose a new optimizer for existing search spaces). As a result, NASLib has the potential to facilitate NAS research by enabling fast advances and evaluations that are, by design, free of confounding factors.

The current API allows defining a search space and a NAS optimizer, together with the search and final evaluation loops, in a few lines of code:

>>> search_space = SimpleCellSearchSpace()

>>> optimizer = DARTSOptimizer(config)

>>> optimizer.adapt_search_space(search_space)

>>> trainer = Trainer(optimizer, config)

>>> trainer.search() # Search for an architecture

>>> trainer.evaluate() # Evaluate the best architecture
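
To make the separation of components concrete, here is a minimal, self-contained sketch of the same call pattern using toy stand-ins. It does not use NASLib and does not reflect its implementation: `ToySearchSpace`, `RandomSearchOptimizer`, `ToyTrainer`, `count_convs`, and the `config` keys are all hypothetical names introduced for illustration, and random search replaces DARTS to keep the example short.

```python
import random


class ToySearchSpace:
    """Hypothetical search space: each edge of a cell picks one operation."""

    def __init__(self, num_edges=4, ops=("skip", "conv3x3", "pool")):
        self.num_edges = num_edges
        self.ops = ops

    def sample(self, rng):
        # An architecture is a tuple with one operation name per edge.
        return tuple(rng.choice(self.ops) for _ in range(self.num_edges))


class RandomSearchOptimizer:
    """Hypothetical optimizer: keeps the best randomly sampled architecture."""

    def __init__(self, config):
        self.rng = random.Random(config["seed"])
        self.search_space = None
        self.best_arch = None
        self.best_score = float("-inf")

    def adapt_search_space(self, search_space):
        # The optimizer only needs an object with a `sample` method,
        # so any search space exposing that method can be plugged in.
        self.search_space = search_space

    def step(self, score_fn):
        arch = self.search_space.sample(self.rng)
        score = score_fn(arch)
        if score > self.best_score:
            self.best_arch, self.best_score = arch, score


class ToyTrainer:
    """Hypothetical trainer: drives the search loop and reports the result."""

    def __init__(self, optimizer, config, score_fn):
        self.optimizer = optimizer
        self.config = config
        self.score_fn = score_fn

    def search(self):
        for _ in range(self.config["epochs"]):
            self.optimizer.step(self.score_fn)

    def evaluate(self):
        return self.optimizer.best_arch, self.optimizer.best_score


def count_convs(arch):
    """Stand-in for validation accuracy: the number of conv3x3 edges."""
    return arch.count("conv3x3")


config = {"seed": 0, "epochs": 50}
search_space = ToySearchSpace()
optimizer = RandomSearchOptimizer(config)
optimizer.adapt_search_space(search_space)
trainer = ToyTrainer(optimizer, config, count_convs)
trainer.search()                            # Search for an architecture
best_arch, best_score = trainer.evaluate()  # Evaluate the best architecture
```

Because the optimizer depends only on the `sample` method of the search space, a different search space or a different optimizer can be swapped in without touching the trainer, which is the kind of component-level reuse described above.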

If you would like to learn more about NASLib, check out our open-source repository at: