
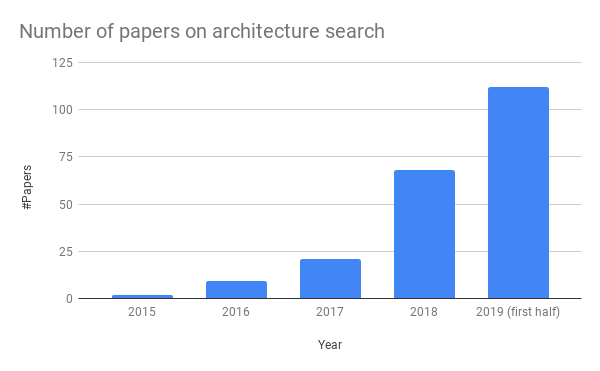

Number of NAS papers over time, based on our NAS literature list

Neural architecture search (NAS) is currently one of the hottest topics in automated machine learning (see the AutoML book), and the number of papers written on the subject appears to be growing exponentially (see the figure above). While many NAS methods are fascinating (please see our survey article for an overview of the main trends and a taxonomy of NAS methods), in this blog post we will focus not on the methods themselves but on how to evaluate them scientifically.

Although NAS methods are steadily improving, the quality of empirical evaluations in this field still lags behind that of other areas of machine learning, AI and optimization. We would therefore like to share some best practices for the empirical evaluation of NAS methods, which we believe will facilitate sustained and measurable progress in the field.

We note that discussions about reproducibility and empirical evaluations are currently taking place in several fields of AI. For example, Joelle Pineau’s keynote at NeurIPS 2018 showed how to improve empirical evaluations of reinforcement learning algorithms, and several of her points carry over to NAS. Another example is the annual machine learning reproducibility challenge, which studies the reproducibility of accepted papers (e.g., at NeurIPS). For the NAS domain, Li and Talwalkar also recently released a paper discussing reproducibility and simple baselines.

Following Joelle Pineau’s reproducibility checklist, we derived a checklist for NAS from the best practices we discuss in detail in our research note on arXiv. That note has all the details, but in a nutshell, the best practices we propose are as follows:

Best Practices for Releasing Code:

- Release Code for the Training Pipeline(s) you use

- Release Code for Your NAS Method

- Don’t Wait Until You’ve Cleaned Up the Code; That Time May Never Come

Best Practices for Comparing NAS Methods:

- Use the Same NAS Benchmarks, not Just the Same Datasets

- Run Ablation Studies

- Use the Same Evaluation Protocol for the Methods Being Compared

- Compare Performance over Time

- Compare Against Random Search

- Validate the Results Several Times

- Use Tabular or Surrogate Benchmarks If Possible

- Control Confounding Factors
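
To make several of the comparison practices above more concrete (comparing against random search, comparing performance over time, repeating runs with several seeds, and using a tabular benchmark), here is a minimal Python sketch. The search space, `build_toy_table` and all of its numbers are hypothetical and synthetic; they stand in for a real tabular benchmark that stores the results of actual training runs. The budget is measured in simulated seconds of training cost, and the function names are illustrative only, not taken from any existing library.

```python
import itertools
import random
import statistics

# Hypothetical toy search space: one operation chosen for each of 3 edges.
OPS = ["conv3x3", "conv1x1", "maxpool"]
NUM_EDGES = 3


def build_toy_table(seed=0):
    """Build a hypothetical tabular benchmark filled with synthetic numbers.

    Maps each architecture to (validation_error, training_cost_seconds), the way a
    real tabular benchmark maps architectures to logged results of actual training.
    """
    rng = random.Random(seed)
    table = {}
    for arch in itertools.product(OPS, repeat=NUM_EDGES):
        error = 0.05 + 0.01 * arch.count("maxpool") + rng.uniform(0.0, 0.02)
        cost = 100.0 + 50.0 * arch.count("conv3x3")
        table[arch] = (error, cost)
    return table


def random_search(table, budget_seconds, seed):
    """Random search baseline: sample architectures uniformly with replacement.

    Stops at the first sample whose cost would exceed the budget. Returns the anytime
    trajectory as (elapsed_seconds, best_error_so_far) pairs, so methods can be
    compared over time rather than only at a single endpoint.
    """
    rng = random.Random(seed)
    archs = list(table)
    elapsed, best, trajectory = 0.0, float("inf"), []
    while True:
        error, cost = table[rng.choice(archs)]
        if elapsed + cost > budget_seconds:
            break
        elapsed += cost  # a table lookup replaces training, but its cost is still charged
        best = min(best, error)
        trajectory.append((elapsed, best))
    return trajectory


def final_errors(table, budget_seconds, seeds):
    """Best error found when the budget runs out, for each independent seed."""
    return [
        traj[-1][1] if traj else float("inf")
        for traj in (random_search(table, budget_seconds, s) for s in seeds)
    ]


if __name__ == "__main__":
    table = build_toy_table()
    errors = final_errors(table, budget_seconds=2000.0, seeds=range(10))
    print(f"random search: {statistics.mean(errors):.4f} "
          f"+/- {statistics.stdev(errors):.4f} over {len(errors)} seeds")
```

Any NAS method being compared would be run against the same table, budget and seeds, so that differences between the reported trajectories come from the search strategy rather than from the training pipeline or the evaluation protocol.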

Best Practices for Reporting Important Details:

- Report the Use of Hyperparameter Optimization

- Report the Time for the Entire End-to-End NAS Method

- Report All the Details of Your Experimental Setup
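
Reporting the end-to-end time means summing every phase a method needs, not only the search itself. The sketch below assumes a hypothetical `ExperimentReport` record and placeholder `search_phase` / `final_training_phase` functions; it times each phase with `time.perf_counter`, records whether hyperparameter optimization was used, and serializes everything to JSON so it can be released alongside the results.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class ExperimentReport:
    """Hypothetical record of details a NAS paper should report."""
    method: str
    dataset: str
    seed: int
    used_hpo: bool      # was hyperparameter optimization used at all?
    hpo_details: str    # what was tuned, on which data, with what budget
    phase_seconds: dict = field(default_factory=dict)  # wall-clock time per phase

    @property
    def end_to_end_seconds(self):
        # End-to-end time covers every phase: HPO, search, final training, evaluation.
        return sum(self.phase_seconds.values())

    def to_json(self):
        record = asdict(self)
        record["end_to_end_seconds"] = self.end_to_end_seconds
        return json.dumps(record, indent=2)


def timed_phase(report, name, fn, *args, **kwargs):
    """Run fn, store its wall-clock duration under `name`, and return its result."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    report.phase_seconds[name] = time.perf_counter() - start
    return result


def search_phase():
    # Placeholder for the architecture search (hypothetical).
    return ("conv3x3", "conv1x1", "maxpool")


def final_training_phase(arch):
    # Placeholder for retraining the selected architecture from scratch (hypothetical).
    return 0.05


if __name__ == "__main__":
    report = ExperimentReport(
        method="example_nas",
        dataset="CIFAR-10",
        seed=0,
        used_hpo=False,
        hpo_details="none; default training hyperparameters",
    )
    arch = timed_phase(report, "search", search_phase)
    test_error = timed_phase(report, "final_training", final_training_phase, arch)
    print(report.to_json())
```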

Furthermore, we propose two ways forward for the community:

- The Need for Proper NAS Benchmarks

- The Need for an Open-Source Library of NAS Methods

Overall, we propose 14 best practices to facilitate research on neural architecture search and two important steps forward for the community. Although we would love to see all future NAS papers strictly follow our best practices, we are fully aware that this won’t always be feasible and that the community will have to adopt them gradually, as guidelines to strive for.

You can find our checklist at automl.org/NAS_checklist and the paper with all the details on arXiv. We would appreciate it if the community shared further insights on scientific best practices for neural architecture search with us, either here in the comments, on Twitter (@AutoMLFreiburg), or by email.

Edit: to make it easy to include this checklist as an appendix in your papers, here is the NAS checklist as a .tex file.