At the risk of sounding cliché, “with great power comes great responsibility.” While we don’t want to suggest that machine learning (ML) practitioners are superheroes, what was true for Spider-Man is also true for those building predictive models – and even more so for those building AutoML tools. Only last year, the Netherlands Institute for Human Rights came to a landmark interim decision that anti-cheat software used by universities during the pandemic is likely to be discriminatory. These ‘proctoring’ tools often include some type of face recognition algorithm, and such algorithms are notorious for exhibiting lower accuracy for people with darker skin tones than for people with lighter skin tones, as illustrated in the seminal work “Gender Shades” by Buolamwini and Gebru. Sure enough, a dark-skinned student was unable to begin her exam due to a “face not found” error and eventually had to resort to shining a bright light directly on her face to resolve the issue. This is only one of many examples that show how people can be harmed by machine learning systems – particularly people who belong to already marginalized populations.

If you have some time, you can also check out the NeurIPS tutorial “Beyond Fairness in Machine Learning” or Cathy O’Neil’s talk about her book “Weapons of Math Destruction” to learn about more issues in machine learning applications. If you have a bit less time, have a look at the following examples to learn more about the negative impact of unfair machine learning systems deployed in the real world [1][2][3].

Clearly, there is a need to incorporate principles of fairness into ML systems. Automated machine learning (AutoML) tools (you can find our introduction to AutoML here) should reflect this need by offering techniques that enable responsible usage. The field of AutoML increasingly focuses on incorporating objectives other than predictive performance, such as model size or inference time. It seems only natural to ask whether it would be possible to incorporate fairness objectives as well. Indeed, over the last few years, several researchers have proposed to jointly optimize predictive performance and fairness using a multi-objective or constrained hyperparameter optimization strategy and have even introduced the term “Fair AutoML”.
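To make the two strategies concrete, here is a minimal sketch in plain Python. It is not the method of any particular paper or AutoML library: the data is made up, the only “hyperparameter” is a decision threshold, and demographic parity difference is just one of many possible fairness metrics. All function names are our own, chosen for illustration.

```python
# Toy example: every value below is synthetic and chosen by hand.
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.45, 0.4, 0.1]  # model scores
y_true = [1, 1, 1, 0, 1, 1, 0, 0]                   # ground-truth labels
group = [0, 0, 0, 0, 1, 1, 1, 1]                    # sensitive attribute


def error_rate(y_true, y_pred):
    """Fraction of misclassified examples."""
    return sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_difference(y_pred, group):
    """Absolute gap in positive-prediction rate between group 0 and group 1."""
    rates = []
    for g in (0, 1):
        preds = [p for p, a in zip(y_pred, group) if a == g]
        rates.append(sum(preds) / len(preds))
    return abs(rates[0] - rates[1])


def evaluate(threshold):
    """Return (threshold, error, unfairness) for one configuration."""
    y_pred = [int(s >= threshold) for s in scores]
    return (threshold,
            error_rate(y_true, y_pred),
            demographic_parity_difference(y_pred, group))


def pareto_front(points):
    """Multi-objective view: keep configurations that no other one dominates.

    A configuration is dominated if another one is at least as good on both
    objectives (lower is better) and strictly better on at least one.
    """
    front = []
    for i, (_, e_i, u_i) in enumerate(points):
        dominated = any(
            e_j <= e_i and u_j <= u_i and (e_j < e_i or u_j < u_i)
            for j, (_, e_j, u_j) in enumerate(points) if j != i
        )
        if not dominated:
            front.append(points[i])
    return front


def best_under_constraint(points, max_unfairness):
    """Constrained view: lowest error among configurations within the limit."""
    feasible = [p for p in points if p[2] <= max_unfairness]
    return min(feasible, key=lambda p: p[1]) if feasible else None


results = [evaluate(t) for t in (0.35, 0.42, 0.5, 0.65)]
front = pareto_front(results)
chosen = best_under_constraint(results, max_unfairness=0.1)

print("Pareto front:", front)
print("Best with unfairness <= 0.1:", chosen)
```

On this toy data, the most accurate threshold is not the one with the smallest gap between groups, so the search returns a trade-off instead of a single winner. Note what the sketch leaves out: which metric to use, which groups to compare, and what limit is acceptable are all decisions the code simply takes as given.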

This raises important questions. What role can AutoML play in prioritizing fairness in ML systems? For example, if the developers of the proctoring software had had access to such an AutoML system, could the login struggles of the Dutch student have been prevented? Put differently: can fairness be automated?

To study this question, AutoML and fairness researchers teamed up, resulting in our recent paper, “Can Fairness be Automated? Guidelines and Opportunities for Fairness-Aware AutoML”. The short (and perhaps unsurprising) answer: no, fairness cannot be automated. Posing fairness as an optimization problem is usually not enough to prevent fairness-related harm – and can sometimes even be counterproductive. However, we also conclude that, when technical interventions are appropriate, fairness-aware AutoML systems can lower the barrier to incorporating fairness considerations in the ML workflow and support users by codifying best practices and implementing state-of-the-art approaches.

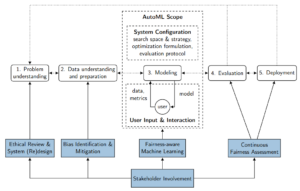

While fairness-aware AutoML can eliminate the need for users to closely follow the technical ML literature, users of course still need to understand the standard ML workflow shown in the figure above and know how to set up an ML task. However, sources of fairness-related harm are not limited to the modeling stage of a machine learning workflow. As a result, both the AutoML system design and the way in which a user interacts with an AutoML system are important factors to consider if we aim to improve fairness. We therefore argue for moving away from monolithic, one-size-fits-all AutoML systems in favor of more interactive, assistive AutoML systems that support users in fairness work.

We are very excited about this topic and hope that our paper will be a starting point for bridging the fields of fairness in ML and AutoML, advancing the broad application of fairness principles in ML applications. Please check out our full paper, in which we discuss how bias and unfairness can be introduced into an AutoML system and propose guidelines for how a fairness-aware AutoML system should be developed, ideally as an interdisciplinary effort. Furthermore, we outline several exciting opportunities and open research questions to be tackled in future research.

You can find the full paper on arXiv.